Image-to-image translation models can generate a high-resolution image from a 2D label map given as input.

While many methods have been proposed for interactively generating and manipulating high-quality 2D images, 3D-aware image synthesis remains a very challenging task. The pix2pix3D model introduced in [1] aims to address it.

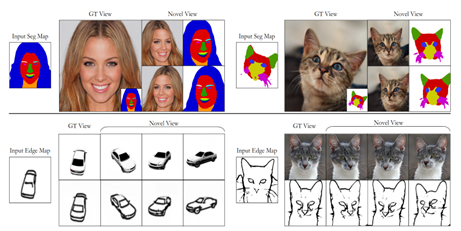

Fig. 1 Given a 2D label map as input, the pix2pix3D model renders an image from any viewpoint.

The 2D label map fed to the model can be a segmentation map or an edge map. Because it synthesizes 3D neural fields, pix2pix3D can serve as an interactive tool in which users edit the label map from different viewpoints and generate the corresponding outputs.
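
To make the two input types concrete, here is a minimal Python sketch of a tiny segmentation map (one integer class label per pixel) and an edge map derived from it by marking pixels whose right or bottom neighbour carries a different label. The class ids, the `edge_map_from_segmentation` helper and the derivation itself are illustrative assumptions, not the data pipeline used in [1].

```python
def edge_map_from_segmentation(seg):
    """Return a binary edge map: 1 where a pixel's label differs from its
    right or bottom neighbour, 0 elsewhere. (Hypothetical helper.)"""
    height, width = len(seg), len(seg[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            right_differs = x + 1 < width and seg[y][x] != seg[y][x + 1]
            below_differs = y + 1 < height and seg[y][x] != seg[y + 1][x]
            if right_differs or below_differs:
                edges[y][x] = 1
    return edges


# Toy 4x4 segmentation map; the class ids are made up
# (0 = background, 1 = object).
segmentation = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
edges = edge_map_from_segmentation(segmentation)
```

Both `segmentation` and `edges` are 2D grids of the same size, which is the sense in which either one can play the role of the "2D label map" described above.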

Training such a model could be extremely costly, since it would seem to require a large dataset pairing user inputs with the desired 3D outputs. The authors address this challenge by learning 3D representations from 2D supervision only, framing training as an image reconstruction task with an adversarial loss.
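
The idea of combining image reconstruction with an adversarial loss can be sketched as a single generator objective. The Python snippet below is a simplified illustration, not the objective from [1]: the L1 reconstruction term, the non-saturating adversarial term, the `adv_weight` parameter and all function names are assumptions made for the example, and images are flattened to plain lists of pixel values.

```python
import math


def l1_reconstruction_loss(rendered, target):
    """Mean absolute difference between rendered and ground-truth pixels."""
    return sum(abs(r - t) for r, t in zip(rendered, target)) / len(target)


def generator_adversarial_loss(fake_logits):
    """Non-saturating GAN loss for the generator: mean softplus(-logit).

    `fake_logits` are the discriminator's raw scores for rendered images;
    the loss shrinks as the discriminator rates them as more realistic.
    """
    return sum(math.log1p(math.exp(-z)) for z in fake_logits) / len(fake_logits)


def generator_loss(rendered, target, fake_logits, adv_weight=1.0):
    """Reconstruction term plus a weighted adversarial term.

    `adv_weight` is a hypothetical balancing factor.
    """
    reconstruction = l1_reconstruction_loss(rendered, target)
    adversarial = generator_adversarial_loss(fake_logits)
    return reconstruction + adv_weight * adversarial


# Toy example: three pixels and a single discriminator score.
loss = generator_loss(
    rendered=[0.2, 0.5, 0.9],
    target=[0.0, 0.5, 1.0],
    fake_logits=[0.0],
)
```

The reconstruction term only needs a 2D image to compare against, and the adversarial term only needs a discriminator operating on 2D images, which is why an objective of this shape does not require ground-truth 3D data.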

Author: Gabriela Ghimpeteanu, Coronis Computing.

Bibliography

[1] Deng, Kangle, et al. “3D-aware conditional image synthesis.” CVPR 2023.