Continuously processing and retaining new information, as humans do naturally, is a highly sought-after capability when training neural networks.

Unfortunately, traditional optimization algorithms often require large amounts of data to be available at training time, and updating a model with new data is difficult once training has been completed. In fact, when new data or tasks arise, previous progress may be lost, as neural networks are prone to catastrophic forgetting. Catastrophic forgetting is the phenomenon in which a neural network completely forgets previously learned knowledge when it is trained on new information.

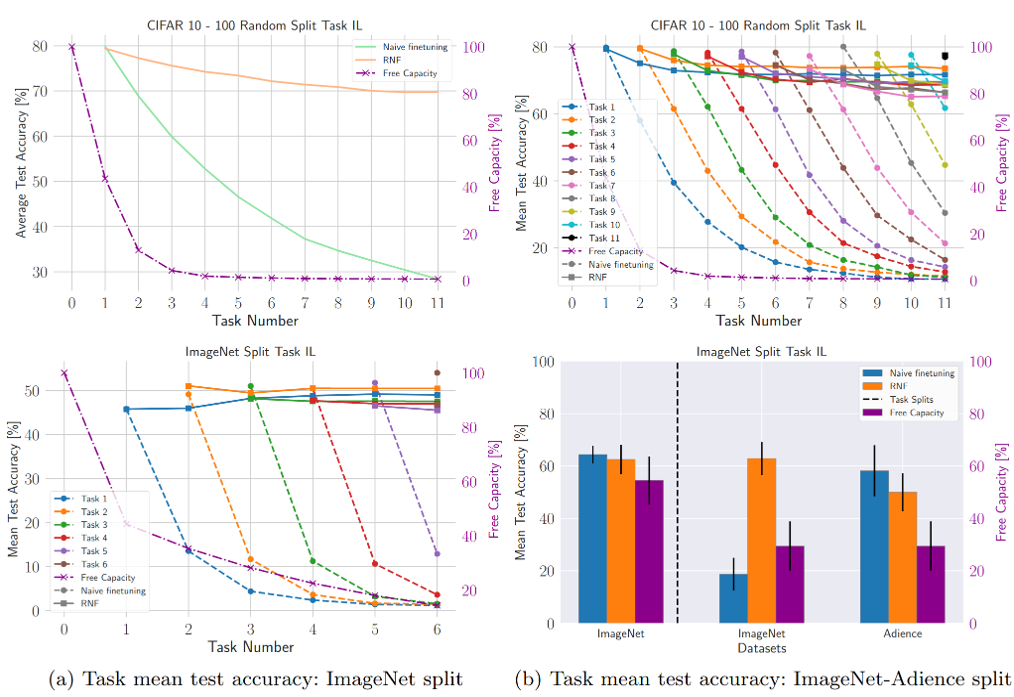

In the context of the iToBoS project, researchers propose a novel training algorithm called Relevance-based Neural Freezing (RNF), which leverages Layer-wise Relevance Propagation (LRP) to retain the information a neural network has already learned in previous tasks when it is trained on new data. The method is evaluated on a range of benchmark datasets as well as on more complex data. It not only retains the knowledge of old tasks within the neural network, but does so more resource-efficiently than other state-of-the-art solutions. Figure 1 shows the effect of RNF compared to traditional neural network fine-tuning over several consecutive training tasks.

Fig. 1 RNF results in comparison to traditional neural network fine-tuning. RNF preserves previously learned knowledge in neural network predictors and allows the model to learn additional information for solving new tasks without forgetting the solutions to tasks it was trained on in the past.
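The core idea of relevance-based freezing can be illustrated with a toy sketch. It is not the implementation from [1]: the function names, the single-output toy layer, the LRP-0-style relevance score, and the top-k selection rule are all assumptions made purely for illustration. The sketch scores each hidden unit by its relevance on reference data from the old task, freezes the most relevant units, and lets only the remaining units change when training on a new task.

```python
# Toy sketch: one hidden layer feeding a single linear output unit.
# All names are hypothetical, and the top-k freezing rule is an assumption,
# not the selection procedure described in the paper.

def mean_hidden_relevance(reference_activations, out_weights):
    """Average LRP-0-style relevance of each hidden unit.

    If the output relevance is set to the output pre-activation
    z = sum_j a_j * w_j, proportional redistribution assigns hidden
    unit j the relevance a_j * w_j.
    """
    n_units = len(out_weights)
    totals = [0.0] * n_units
    for activations in reference_activations:
        for j in range(n_units):
            totals[j] += activations[j] * out_weights[j]
    return [total / len(reference_activations) for total in totals]


def freeze_mask(relevance, n_frozen):
    """Mark the n_frozen most relevant units as frozen (True)."""
    ranked = sorted(range(len(relevance)), key=lambda j: relevance[j], reverse=True)
    frozen = set(ranked[:n_frozen])
    return [j in frozen for j in range(len(relevance))]


def masked_sgd_step(weights, grads, frozen, lr):
    """Plain SGD update that leaves the weights of frozen units unchanged."""
    return [w if is_frozen else w - lr * g
            for w, g, is_frozen in zip(weights, grads, frozen)]


# Hidden activations for two reference samples of the old task (made-up values).
old_task_activations = [[1.0, 0.0, 2.0, 0.5],
                        [0.5, 0.0, 1.0, 0.5]]
out_weights = [0.75, 0.25, 1.0, -0.125]

relevance = mean_hidden_relevance(old_task_activations, out_weights)
frozen = freeze_mask(relevance, n_frozen=2)

# Gradients from a hypothetical new task; only unfrozen weights move.
new_task_grads = [0.5, 0.5, 0.5, 0.5]
updated = masked_sgd_step(out_weights, new_task_grads, frozen, lr=0.5)
```

In this toy setup, units 0 and 2 carry the most relevance for the old task, so their weights stay fixed while the weights of units 1 and 3 remain free to adapt to the new task. A real network would compute relevance with a full LRP backward pass through all layers and mask gradients per layer.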

This work [1] extends a successful line of research on the use of Explainable Artificial Intelligence (XAI) for improving machine learning models, e.g. improving neural network efficiency through LRP-based pruning [2] and compression [3], or improving inference [4], e.g. by fixing Clever Hans behaviour [5].

Sebastian Lapuschkin, Fraunhofer HHI.

[1] Ede et al., 2022. “Explain to not Forget: Defending Against Catastrophic Forgetting with XAI” Accepted for publication at CD-MAKE 2022, preprint available at https://arxiv.org/abs/2205.01929

[2] Yeom et al., 2021. “Pruning by explaining: A novel criterion for deep neural network pruning” https://www.sciencedirect.com/science/article/pii/S0031320321000868

[3] Becking et al., 2022. “ECQx: Explainability-Driven Quantization for Low-Bit and Sparse DNNs” https://link.springer.com/chapter/10.1007/978-3-031-04083-2_14

[4] Sun et al., 2022. “Explain and improve: LRP-inference fine-tuning for image captioning models” https://www.sciencedirect.com/science/article/pii/S1566253521001494

[5] Anders et al., 2022. “Finding and removing Clever Hans: Using explanation methods to debug and improve deep models” https://www.sciencedirect.com/science/article/pii/S1566253521001494