In the U.S.A., it is not clear whether Clinical Decision Support (CDS) systems fall into the category of SaMD (Software as a Medical Device).

Section 3060 of the 21st Century Cures Act excludes from the definition of “medical device” Clinical Decision Support software that enables a health care professional to independently review the basis for its recommendations, so that the professional does not rely primarily on any of those recommendations to make a clinical diagnosis or treatment decision.

The interpretation of this definition is not straightforward. Consider two examples. Software that matches a patient's diagnosis with published reference literature to recommend a treatment would not be considered a “medical device”, because a physician could reasonably reach the same conclusion on his or her own with some manual work. By contrast, higher-level tools that use big data analytics (including imaging analytics and predictive analytics) to make diagnostic recommendations would be considered “medical devices” and would require an additional level of review and approval. FDA has not yet drawn a clear line between the two cases.

In this context, Artificial Intelligence (AI) and Machine Learning (ML) represent one of the biggest challenges for Regulatory Bodies, because of several distinctive characteristics:

- The continuous learning and adaptation of machine-learning algorithms, which calls into question the value of a pre-market validation by the Regulatory Bodies[1];

- The need for massive quantities of “sensitive data” for training and validation, which must comply with stringent data protection and privacy rules such as the GDPR in Europe and HIPAA in the USA;

- The “opacity” of machine learning used in diagnostic applications (the “black box” issue in medicine), which:

    - conflicts with the accepted principle in healthcare diagnostics that medical professionals must be able to independently review the basis for the recommendations proposed by the technology;

    - raises legal questions about malpractice and liability.

These characteristics often clash with current regulations, which were designed for medical devices that are “fixed” in design and rarely change. The challenge is how to regulate products that are in a constant state of evolution.

It is worth noting that FDA has already authorized several AI-based SaMDs (through 510(k) clearance or the De Novo process), but these products are all based on “locked” algorithms, i.e. they do not fully exploit the potential of AI to learn from new data and adapt.

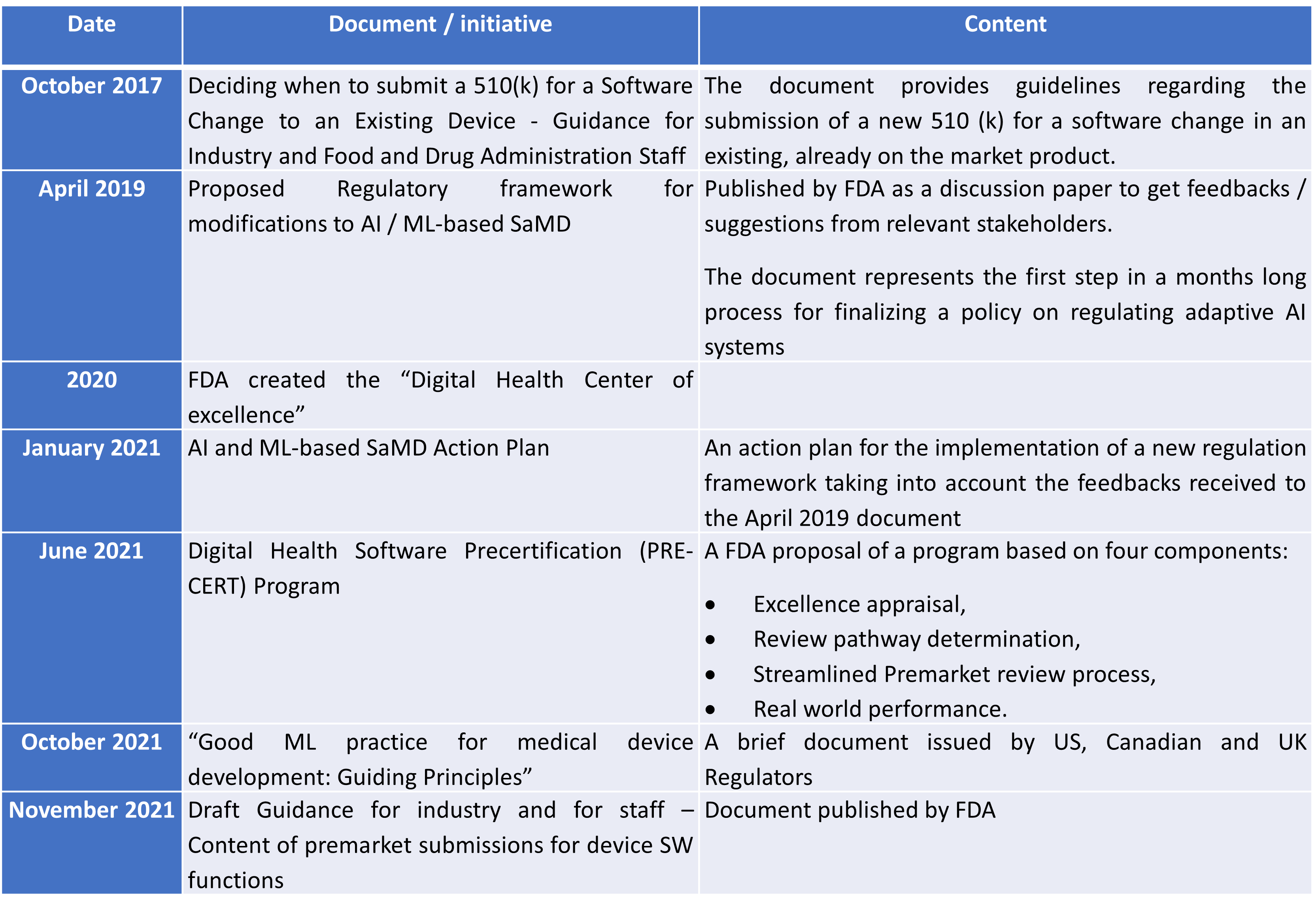

A summary of the FDA’s steps towards a new regulatory framework

The following table summarizes the main documents and initiatives in the U.S.A. towards a new regulatory framework that takes into account the characteristics of AI- and ML-based SaMDs.

Steps towards a new regulatory framework for SaMDs

All of these documents highlight FDA's key objective: to maintain reasonable assurance of the safety and effectiveness of AI/ML-based SaMDs while allowing the software to continue to learn and evolve over time to improve patient care.

Figuring out how to embrace the adaptive nature of AI- and ML-powered devices without hampering the advancement of the technology will be a challenge and will require compromise from both regulators and industry.

Scott Gottlieb, then FDA Commissioner, noted that “AI holds enormous promise for the future of medicine” and that “we must also recognize that FDA’s usual approach to medical product regulation is not always well suited to emerging technologies like digital health, or the rapid pace of change in this area. If we want American patients to benefit from innovation, FDA itself must be as nimble and innovative as the technologies we’re regulating.”

[1] Among the challenges of adaptive software: a. How can data biases be prevented, reduced, and measured? b. How can we be sure that training data is inclusive of diverse populations? c. Is there anything a patient or user can do that would interfere with the algorithm?