Data pipelining is the process of moving data from one system or application to another in a controlled and automated way.

A data pipeline typically consists of a series of processing steps connected into a workflow, where the output of one step becomes the input of the next. The purpose of data pipelining is to ensure that data is available in the right format, at the right time and in the right place to support data-driven applications. In iToBoS, this process is critical for the ingestion, harmonization and preprocessing of DICOM images, clinical (tabular) data, genetic data and the other forms of data that the iToBoS AI Cognitive Assistant handles.
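
The idea of processing steps chained into a workflow can be illustrated with a minimal Python sketch that uses only the standard library. It assumes a hypothetical CSV export of clinical (tabular) data. The column names, the millimetre-to-centimetre harmonization and the in-memory SQLite target table are illustrative choices and are not taken from the iToBoS pipeline.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: column names and units are illustrative only.
RAW_CSV = """patient_id,Age,lesion_diam_mm
P001,54,6.5
P002,,4.0
P003,61,12.0
"""


def extract(text):
    """Extract: parse CSV text into a list of dicts, one per row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: harmonize names and units, convert types, drop incomplete rows."""
    cleaned = []
    for row in rows:
        if not row["Age"]:
            continue  # skip records with a missing age
        cleaned.append({
            "patient_id": row["patient_id"],
            "age": int(row["Age"]),
            "lesion_diameter_cm": float(row["lesion_diam_mm"]) / 10.0,
        })
    return cleaned


def load(rows, conn):
    """Load: write the harmonized rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS clinical "
        "(patient_id TEXT PRIMARY KEY, age INTEGER, lesion_diameter_cm REAL)"
    )
    conn.executemany(
        "INSERT INTO clinical VALUES (:patient_id, :age, :lesion_diameter_cm)",
        rows,
    )
    conn.commit()


def run_pipeline(text, conn):
    """Chain the steps so that each step's output feeds the next one."""
    load(transform(extract(text)), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_pipeline(RAW_CSV, conn)
    print(conn.execute("SELECT * FROM clinical ORDER BY patient_id").fetchall())
```

Each step is a plain function, and the pipeline is their composition. This makes every step testable in isolation, and frameworks such as those discussed below add scheduling, monitoring and error handling on top of the same idea.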

Some popular frameworks for data pipelining and ETL (Extract, Transform, Load) operations are Apache Airflow, Luigi and Apache NiFi. Apache Airflow is an open-source workflow orchestration platform that enables users to define, schedule and monitor complex workflows. Airflow is built around the concept of directed acyclic graphs (DAGs), which describe the dependencies between individual tasks. Workflows are defined as Python code, so they can be easily maintained and version-controlled. Airflow also provides a web interface for monitoring the status of workflows and visually inspecting the data flow.
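
To make the DAG concept concrete, the following sketch uses Python's standard-library `graphlib` module (Python 3.9+) rather than Airflow itself, and the task names are hypothetical. Each task maps to the set of tasks it depends on. A topological sort then yields an order that respects every dependency, and grouping ready tasks shows which ones could run in parallel. A cycle makes any such order impossible, which is why the graph must be acyclic. In Airflow, the same dependencies would instead be declared between operators inside a DAG, for example with the `>>` operator.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical workflow: each task maps to the set of upstream tasks
# that must finish before it can start.
WORKFLOW = {
    "extract_dicom": set(),
    "extract_clinical": set(),
    "harmonize": {"extract_dicom", "extract_clinical"},
    "preprocess": {"harmonize"},
    "load": {"preprocess"},
}


def execution_order(graph):
    """Return one order in which every task runs after its dependencies."""
    return list(TopologicalSorter(graph).static_order())


def is_acyclic(graph):
    """Check the DAG property: a cycle would make any valid order impossible."""
    try:
        TopologicalSorter(graph).prepare()
    except CycleError:
        return False
    return True


def parallel_batches(graph):
    """Group tasks into batches whose members could run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


if __name__ == "__main__":
    print(execution_order(WORKFLOW))
    print(parallel_batches(WORKFLOW))
    # Making "extract_dicom" depend on "load" closes a loop.
    print(is_acyclic(dict(WORKFLOW, extract_dicom={"load"})))  # False
```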

Luigi is another open-source Python framework that can be used for ETL operations. In fact, Luigi is specifically designed for building data pipelines, and ETL is one of its primary use cases. With Luigi, users define tasks that extract data from various sources, transform it using Python code and load it into a target system. Luigi provides a powerful framework for defining and scheduling tasks, allowing users to build complex pipelines that take the dependencies between tasks into account and handle errors and exceptions appropriately. It also offers a web-based interface for visualizing ETL pipelines, which makes it easier to monitor their progress and identify potential bottlenecks and errors.
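
Luigi structures a pipeline as task classes. Each class declares its dependencies (`requires`), the target it produces (`output`) and the work to do (`run`). A task counts as complete once its output exists, so re-running a pipeline skips steps that have already finished. The sketch below imitates that pattern in plain Python so that it runs with the standard library alone. The `Task` base class, the `build` function and the file names are hypothetical and only mirror the idea behind Luigi's `luigi.Task`. They are not the Luigi API.

```python
import os
import tempfile


class Task:
    """Plain-Python imitation of the Luigi task pattern (not Luigi itself)."""

    def __init__(self, workdir):
        self.workdir = workdir

    def requires(self):
        return []  # upstream tasks that must finish first

    def output(self):
        raise NotImplementedError  # path of the file this task produces

    def run(self):
        raise NotImplementedError

    def complete(self):
        # A task counts as done once its output file exists.
        return os.path.exists(self.output())

    def write_output(self, text):
        # Write to a temporary file, then rename it into place, so a crash
        # never leaves a half-written file that would look "complete".
        tmp = self.output() + ".tmp"
        with open(tmp, "w") as f:
            f.write(text)
        os.replace(tmp, self.output())


def build(task, log):
    """Run dependencies first (depth-first), skipping completed tasks."""
    for dep in task.requires():
        build(dep, log)
    if not task.complete():
        task.run()
        log.append(type(task).__name__)


class Extract(Task):
    def output(self):
        return os.path.join(self.workdir, "raw.csv")

    def run(self):
        # Hypothetical source data standing in for a real extraction step.
        self.write_output("p001,54\np002,61\n")


class Transform(Task):
    def requires(self):
        return [Extract(self.workdir)]

    def output(self):
        return os.path.join(self.workdir, "clean.csv")

    def run(self):
        with open(self.requires()[0].output()) as src:
            self.write_output(src.read().upper())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        log = []
        build(Transform(workdir), log)
        print(log)  # ['Extract', 'Transform']
        build(Transform(workdir), log)
        print(log)  # unchanged: both outputs already exist
```

The atomic write matters because completion is judged by whether the output exists. A half-written file must therefore never appear under its final name, or a failed step would be mistaken for a finished one.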

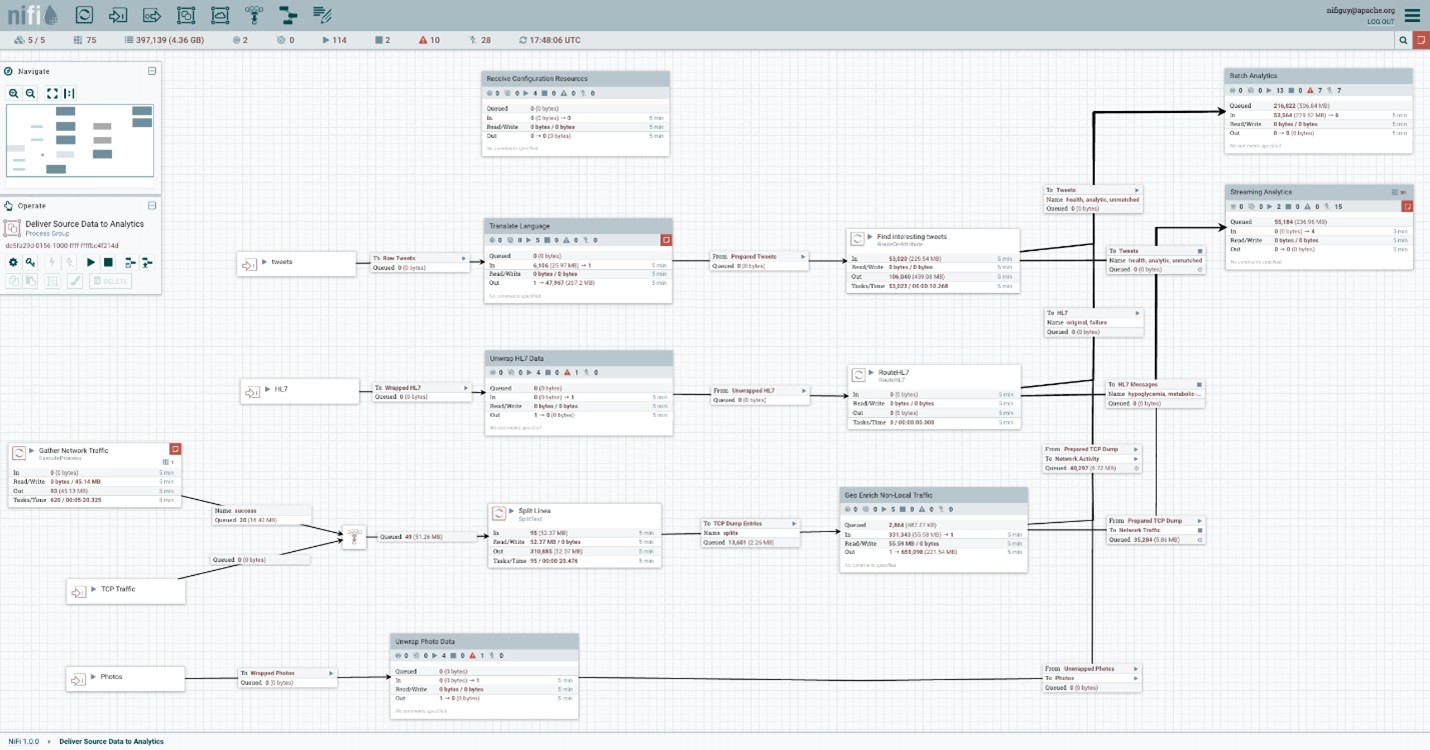

Apache NiFi is another framework, specifically designed for data ingestion, processing and distribution, which makes it well suited to ETL pipelines. NiFi can manage a wide range of data sources, including databases, APIs, files and streaming sources. It offers built-in processors for applying transformations and enrichments to the data, such as filtering, splitting, merging and joining. NiFi tracks the lineage of every piece of data flowing through it, so users can trace where the data came from and how it was transformed. It also offers flow control through conditional routing, loops and prioritization. The framework further provides several security features, including encryption, authentication and access control, which are crucial for protecting sensitive data during ETL operations, such as the clinical and genetic data handled in iToBoS. Finally, NiFi provides a visual interface in which ETL pipelines can be designed and monitored.
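
Several of the NiFi concepts above can be illustrated with a small standard-library Python sketch: data units that carry attributes, attribute-based routing, prioritized queues and lineage tracking. The sketch is loosely modelled on NiFi's FlowFiles, which pair content with key/value attributes. It is not NiFi code and does not use NiFi's API. The class and function names, the `priority` and `mime.type` attributes and the event labels are assumptions chosen for illustration.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass
class FlowFile:
    """Hypothetical NiFi-like data unit: content plus key/value attributes."""
    content: bytes
    attributes: dict
    lineage: list = field(default_factory=list)  # recorded processing events

    def record(self, event):
        self.lineage.append(event)


def update_attribute(ff, key, value):
    """Enrichment step: add or overwrite an attribute and log the change."""
    ff.attributes[key] = value
    ff.record(f"ATTRIBUTES_MODIFIED {key}={value}")
    return ff


def route_on_attribute(ff, rules):
    """Return the first relationship whose condition holds for the attributes."""
    for relationship, condition in rules.items():
        if condition(ff.attributes):
            ff.record(f"ROUTE {relationship}")
            return relationship
    ff.record("ROUTE unmatched")
    return "unmatched"


class PriorityQueue:
    """Queue that releases lower 'priority' values first, FIFO on ties."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker, avoids comparing FlowFiles

    def put(self, ff):
        prio = int(ff.attributes.get("priority", 100))  # assumed default
        heapq.heappush(self._heap, (prio, next(self._counter), ff))

    def get(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


if __name__ == "__main__":
    rules = {"dicom": lambda a: a.get("mime.type") == "application/dicom"}
    queue = PriorityQueue()
    incoming = [
        FlowFile(b"<pixel data>", {"filename": "scan1.dcm", "mime.type": "application/dicom"}),
        FlowFile(b"id,age", {"filename": "clinical.csv", "mime.type": "text/csv", "priority": "1"}),
    ]
    for ff in incoming:
        ff.record("RECEIVE")
        if route_on_attribute(ff, rules) == "dicom":
            update_attribute(ff, "priority", "5")
        queue.put(ff)
    while queue:
        ff = queue.get()
        print(ff.attributes["filename"], ff.lineage)
```

Because every step appends to `lineage`, each record carries its own history, which is the property that lets a lineage-tracking system answer where a piece of data came from and how it was changed.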

Overall, Apache Airflow and Luigi are primarily batch-oriented task orchestrators. Apache NiFi, by contrast, processes data continuously as it arrives, which makes it better suited to real-time data processing, and its drag-and-drop interface is more user-friendly. For this reason, the ETL operations of the iToBoS AI Cognitive Assistant are based on Apache NiFi, hosted on the iToBoS cloud.

Figure 1: Screenshot from Apache NiFi (source: Wikimedia Commons)